Coagulation Instruments and Reagents

Advances make coagulation testing even more useful

Recent advances in coagulation technology have resulted in greater capability, productivity, sensitivity, and specificity and, ultimately, in improved clinical care of patients.

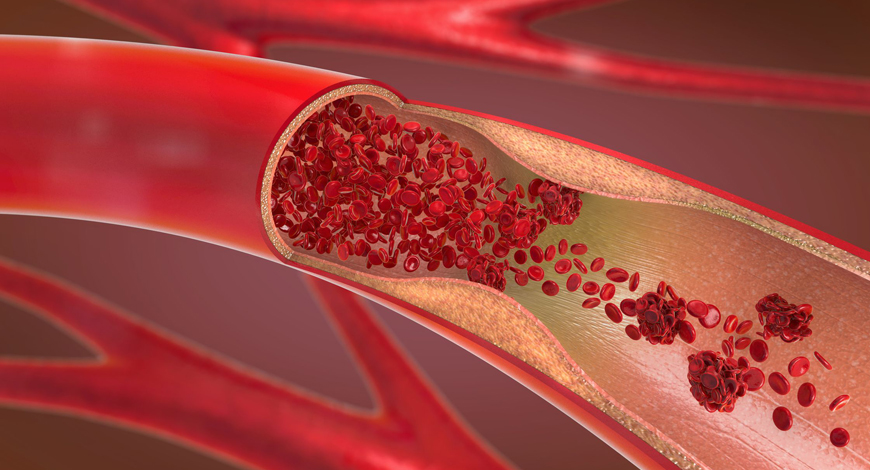

In recent years, blood coagulation monitoring has become crucial for diagnosing the causes of hemorrhage, developing anticoagulant drugs, assessing bleeding risk in extensive surgical procedures and dialysis, and investigating the efficacy of hemostatic therapies. Advanced technologies such as microfluidics, fluorescence microscopy, electrochemical sensing, photoacoustic detection, and micro/nano electromechanical systems (MEMS/NEMS) have been used to develop highly accurate, robust, and cost-effective point-of-care (POC) devices. These devices track how the optical (light transmission/scattering), electrochemical (electrical impedance), and mechanical (viscoelastic) properties of blood change as it clots.
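As an illustration of optical clot detection, here is a minimal sketch. It assumes the instrument records a signal that rises as fibrin forms (absorbance or scattered light; a transmittance signal would first be inverted) at fixed intervals after the start reagent is added. One common way to report a clotting time, sometimes called a percent-detection method, is the time at which the signal has risen by a set fraction of its total change. The function name, the 50% default threshold, the `min_delta` cutoff, and the synthetic curve are all hypothetical and do not represent any manufacturer's algorithm.

```python
import math


def clot_time_percent(times, signal, fraction=0.5, min_delta=0.01):
    """Estimate a clotting time (s) from a rising optical signal (hypothetical method).

    Returns the linearly interpolated time at which the signal first reaches
    baseline + fraction * (plateau - baseline), or None if no clot is detected.
    """
    if len(times) != len(signal) or len(times) < 2:
        raise ValueError("times and signal must be equal-length, with at least 2 points")
    if not 0 < fraction <= 1 or min_delta <= 0:
        raise ValueError("fraction must be in (0, 1] and min_delta must be positive")
    baseline = signal[0]   # assumption: first reading precedes fibrin formation
    plateau = max(signal)  # assumption: clotting is complete within the recording
    if plateau - baseline < min_delta:
        return None        # signal never rose enough: report "no clot detected"
    target = baseline + fraction * (plateau - baseline)
    for i in range(1, len(signal)):
        if signal[i] >= target:
            t0, t1 = times[i - 1], times[i]
            s0, s1 = signal[i - 1], signal[i]
            # Interpolate between the two readings that bracket the threshold.
            return t0 + (target - s0) * (t1 - t0) / (s1 - s0)
    return None


# Synthetic (hypothetical) curve: optical density rising along a sigmoid
# centred at 12 s, sampled every 0.1 s for 30 s.
times = [i * 0.1 for i in range(301)]
od = [0.1 + 0.5 / (1 + math.exp(-(t - 12.0) / 0.8)) for t in times]
print(round(clot_time_percent(times, od), 1))  # 12.0
```

Reading the clotting time at a fraction of the total signal change, rather than at a fixed absolute level, makes this sketch insensitive to a sample's starting turbidity. That is one reason relative thresholds are a reasonable choice for optical detection.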

Not all analyzers are created equal: some smaller-capacity models lack the full automation that makes the larger models so convenient.

Automation may be a bonus to some labs and a necessity to others. Lower-volume labs might be well served by small, manual bench analyzers that perform basic tests such as prothrombin time (PT), activated partial thromboplastin time (aPTT), fibrinogen (FIB), and D-dimer. Intermediate-volume labs may be interested in freestanding semi-automated analyzers with a larger test menu that includes factor and hypercoagulability assays.

High-volume labs may benefit from the convenience of fully automated floor-model analyzers, especially when they are connected to a total laboratory automation system that centrifuges tubes to separate plasma. Fully automated systems are known for high-throughput testing and large reagent capacity, which help the lab improve its efficiency and turnaround times.

When comparing coagulation analyzers, note that some systems rely on optical detection alone, while others use both mechanical and optical detection, depending on the assay type (clot-based, chromogenic, or immunoturbidimetric). The more sensitive the detection system, the more patient samples it can work up. Both approaches do the job, but each has its own bias.

Smaller companies might not offer the technical support that larger companies do, or their systems may not be as well engineered. Larger companies may generate more data for FDA clearance; of course, clearance does not guarantee performance equivalent to that of another brand.

Larger models combine multiple technologies, such as several wavelengths for coagulation, immunologic, and enzymatic testing. Bench and tabletop models might not offer these capabilities and may not connect to a laboratory information system (LIS), whereas larger models may have the computing capability to connect to an LIS.

Models that use an embedded computer board instead of a personal computer may require replacing the system when upgrading. Changing an embedded system is a major change, one that may require FDA notification. Some standalone models may still run Windows 7 or 10; the next generation of analyzers is expected to have newer operating systems.

In 1905, Paul Morawitz (1879–1936) proposed a theory of blood coagulation that became a classic. An excellent general physician, Morawitz extended his interest in clotting to clinical work on thrombosis and angina and to the use of quinidine for cardiac dysfunction. At the beginning of the twentieth century, the prevailing theory was that prothrombin was converted to thrombin under the influence of thrombokinase (thromboplastin) and calcium, and that thrombin in turn converted fibrinogen to fibrin. The mechanism by which tissue thromboplastin converted prothrombin was called the extrinsic pathway. It was also known that blood clotted without added tissue, albeit more slowly; this was the intrinsic pathway, which was largely neglected for almost half a century.
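The classic scheme described above can be written as two reactions. This is a restatement of the text, not a modern model of coagulation:

$$
\begin{aligned}
\text{prothrombin} &\xrightarrow{\ \text{thrombokinase (thromboplastin)},\ \mathrm{Ca}^{2+}\ } \text{thrombin} \\
\text{fibrinogen} &\xrightarrow{\ \text{thrombin}\ } \text{fibrin}
\end{aligned}
$$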

Ironically, Morawitz himself died of acute myocardial infarction two years after his first attack of angina and coronary thrombosis.

The discovery of the coagulation factors and of the enzymatic reactions that generate thrombin and fibrin led to more precise coagulation tests for diagnosing defects of hemostasis. The discovery of heparin, of oral anticoagulants, and of the antithrombotic properties of aspirin paved the way for more effective antithrombotic drugs. Plasma fractionation techniques made it possible to prepare coagulation factor concentrates, and knowledge of the genes that encode and regulate the synthesis of these factors has made it possible to produce them using molecular biology techniques. The prospects for diagnosing and treating hemorrhagic and thrombotic diseases are enormous, and these advances have made more extensive and effective surgical techniques possible.

The main advantage of POC testing is the shorter time it takes to obtain a result. Results are also often presented in a way that is easier to understand, though not always, and they may still require a healthcare professional to interpret them safely.

POC testing can also be performed by people who have not had formal laboratory training, including nurses, doctors, paramedics, and patients themselves. There are many kinds of near-patient testing, such as malaria antigen tests, pregnancy tests, blood glucose monitoring, and urinalysis. These tests often use samples that are relatively easy to collect, such as saliva, urine, or finger-prick blood.

Together with other portable medical equipment, such as thermometers or blood pressure devices, they can facilitate rapid and convenient medical assessment.

POC approaches can also be more costly than laboratory-based testing. A study from 1995 found that POC glucose testing cost anywhere from 1.1 to 4.6 times as much as the same test performed in the laboratory. Hidden costs, such as those of a quality control program or equipment upkeep, are often overlooked. However, laboratory testing has its own hidden costs, such as buildings, staff, and overheads.
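The effect of hidden costs on cost per test can be sketched with simple arithmetic. Fixed monthly costs such as QC and upkeep are spread over the number of tests performed, so they weigh far more heavily on a low-volume POC device than on a high-volume laboratory. The class, its fields, and every figure below are hypothetical and chosen only for illustration. They are not taken from the 1995 study.

```python
from dataclasses import dataclass


@dataclass
class TestingCosts:
    """Hypothetical monthly cost model for one testing site (arbitrary currency units)."""
    consumables_per_test: float  # strips, cartridges, or reagent used per test
    qc_per_month: float          # quality control materials and time
    upkeep_per_month: float      # maintenance, service, and calibration
    overhead_per_month: float    # buildings, staff, and other overheads
    tests_per_month: int

    def cost_per_test(self) -> float:
        if self.tests_per_month <= 0:
            raise ValueError("tests_per_month must be positive")
        fixed = self.qc_per_month + self.upkeep_per_month + self.overhead_per_month
        # Fixed costs are amortized across every test performed in the month.
        return self.consumables_per_test + fixed / self.tests_per_month


# Illustrative numbers only (POC overheads assumed to be absorbed elsewhere).
poc = TestingCosts(consumables_per_test=3.0, qc_per_month=150.0,
                   upkeep_per_month=50.0, overhead_per_month=0.0, tests_per_month=200)
lab = TestingCosts(consumables_per_test=0.5, qc_per_month=200.0,
                   upkeep_per_month=300.0, overhead_per_month=2000.0, tests_per_month=5000)
print(poc.cost_per_test(), lab.cost_per_test())   # 4.0 1.0
print(poc.cost_per_test() / lab.cost_per_test())  # 4.0
```

In this toy model, changing test volume at either site moves the ratio considerably. This illustrates why cost comparisons between POC and laboratory testing can span a wide range.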

Nonetheless, the immediacy and convenience of POC testing can offset the increased costs. Rapid results allow a treatment plan to be put into effect quickly, and where time is critical, this can make a big difference to care.

Even where time is not critical and speed is more a matter of convenience, being able to move on with diagnosis and treatment almost always benefits the patient. In some circumstances, a rapid result can help allow a safe discharge from hospital, shortening the length of stay and helping to reduce the cost of care. Some wearable monitoring and testing devices can also reduce hospital readmission rates by sending telemetry results to the clinic or by giving patients information they can use themselves.

As technology develops and POC testing devices continue to improve, concerns about the accuracy of POC testing are likely to diminish. As more experience and understanding are gained with POC devices, the benefits of quick results and easy testing are likely to be seen as significant and desirable, and POC testing itself as routine.

A coagulation analyzer brings its greatest value to the laboratory when paired with an extensive, best-in-class reagent menu for both routine and specialty assays. Laboratories should determine the testing needs of their patient population based on what healthcare providers order. The analyzer should support a testing menu broad enough to accommodate future growth in those needs. Longer reagent and consumable stability and larger onboard quantities increase throughput and improve turnaround times. The hands-on time required to maintain and run the analyzer should fit the laboratory’s workflow and demand. Maintenance activities should be minimal, and the system should provide full traceability for all end-user activities.
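A rough calculation shows how reagent quantity, stability, and per-test volume interact. A vial yields a limited number of tests by volume, but once opened it can be used only until its onboard stability expires. The function name, the dead-volume figure, and the example numbers are hypothetical assumptions, not specifications of any particular analyzer or reagent.

```python
import math


def usable_tests(vial_ml: float, per_test_ul: float, dead_volume_ul: float,
                 onboard_days: float, tests_per_day: float) -> int:
    """Tests actually obtained from one opened reagent vial (hypothetical model).

    The yield is limited either by volume (how many aliquots the vial holds)
    or by onboard stability (how many tests the lab runs before the vial expires).
    """
    volume_limited = math.floor((vial_ml * 1000.0 - dead_volume_ul) / per_test_ul)
    stability_limited = math.floor(onboard_days * tests_per_day)
    return max(0, min(volume_limited, stability_limited))


# Hypothetical 10 mL vial, 500 µL dead volume, 3-day onboard stability, 40 PTs per day.
print(usable_tests(10.0, 50.0, 500.0, 3, 40))   # 120: stability-limited, some reagent expires unused
print(usable_tests(10.0, 100.0, 500.0, 3, 40))  # 95: volume-limited
```

In this toy example, halving the per-test volume pays off only if the lab runs enough tests to use the vial before it expires. For this reason, stability and volume per test are worth evaluating together.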

Key features to look for when selecting a coagulation or hemostasis analyzer include:

- The latest photo-optical clot-detection technology is preferable to photo-mechanical technology.

- Systems that need no stirrers or stir bars improve accuracy and reduce errors and costs.

- Lower reagent volumes (e.g., 50 µL for PT) significantly reduce the cost per test, which can translate into lower charges for patients.

- LED light sources are preferable to standard halogen lamps because of their longer life.

- A touch-screen interface is now the standard for user convenience.

- Automatic optical start is highly desirable, because starting the timer with a dedicated special pipette poses several operational and accuracy challenges.

- The instrument should have at least 10 sample and 3 reagent incubation positions.

- Reporting results as INR, ratio, and Quick percent is preferable.

- A maintenance-free instrument with no moving parts is preferable.

- Compatibility with additional assays, such as D-dimer, is a particularly useful feature.

- The instrument should store at least 100 patient results.

- Printer connectivity should be available as an option.
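Of the result formats in the list above, the PT ratio and INR follow standard formulas. The ratio is the patient's PT divided by the laboratory's mean normal PT (MNPT), and the INR raises that ratio to the power of the thromboplastin reagent's International Sensitivity Index (ISI). The following is a minimal sketch. The function names and example values are illustrative. Quick percent is not shown, because it is read from a calibration curve prepared from dilutions of normal plasma, which is specific to each laboratory and reagent.

```python
def pt_ratio(patient_pt_s: float, mnpt_s: float) -> float:
    """PT ratio: patient prothrombin time / mean normal prothrombin time (both in seconds)."""
    if patient_pt_s <= 0 or mnpt_s <= 0:
        raise ValueError("prothrombin times must be positive")
    return patient_pt_s / mnpt_s


def inr(patient_pt_s: float, mnpt_s: float, isi: float) -> float:
    """International Normalized Ratio: (PT ratio) raised to the reagent's ISI."""
    return pt_ratio(patient_pt_s, mnpt_s) ** isi


# Illustrative values: patient PT 24 s, MNPT 12 s.
print(pt_ratio(24.0, 12.0))            # 2.0
print(round(inr(24.0, 12.0, 1.0), 2))  # 2.0 (with ISI 1.0, INR equals the ratio)
print(round(inr(24.0, 12.0, 1.2), 2))  # 2.3
```

The same patient ratio produces different INRs with reagents of different ISI. This is why the ISI of each reagent lot has to be entered correctly on the analyzer.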

All analyzers on the market are capable systems; still, some questions are worth asking when purchasing a coagulation analyzer, such as: How does the analyzer help technologists work efficiently, and how does it mitigate erroneous results?

If the ultimate goal is to maximize productivity, testing capacity, accuracy, and reliability while reducing diagnostic errors, another question worth asking is: How easy is it to run quality control (QC) on the analyzer, and is QC fully automated?
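One way automated QC review can work is for the analyzer or its middleware to compare each control result with the control's target mean and standard deviation. The sketch below applies three of the widely used Westgard rules (1-2s warning, 1-3s and 2-2s rejection) as an example. The article does not say which rules any particular analyzer applies, and the function name and control values are hypothetical.

```python
def westgard_flags(values, target_mean, target_sd):
    """Return Westgard rule violations for control results, oldest first (illustrative subset).

      1_2s  warning: one result beyond +/-2 SD
      1_3s  reject:  one result beyond +/-3 SD
      2_2s  reject:  two consecutive results beyond the same +/-2 SD limit
    """
    if target_sd <= 0:
        raise ValueError("target_sd must be positive")
    z = [(v - target_mean) / target_sd for v in values]  # distance from target in SDs
    flags = []
    if any(abs(x) > 2 for x in z):
        flags.append("1_2s")
    if any(abs(x) > 3 for x in z):
        flags.append("1_3s")
    if any((a > 2 and b > 2) or (a < -2 and b < -2) for a, b in zip(z, z[1:])):
        flags.append("2_2s")
    return flags


# Hypothetical normal-control PT results (seconds): target 12.0 s, SD 0.5 s.
print(westgard_flags([12.1, 11.8, 13.6], 12.0, 0.5))  # ['1_2s', '1_3s']
print(westgard_flags([13.1, 13.2], 12.0, 0.5))        # ['1_2s', '2_2s']
print(westgard_flags([12.2, 11.9], 12.0, 0.5))        # []
```

A full QC program would add more rules, multiple control levels, and across-run checks. The point here is only that rule evaluation is mechanical and therefore easy to automate.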

Also consider the response time for on-site support, the availability of remote support through a software application, and how these services can improve customer satisfaction.

Ultimately, each lab needs to evaluate which of its own challenges must be overcome and how the right-fit system can mitigate them to deliver the best patient results and care.